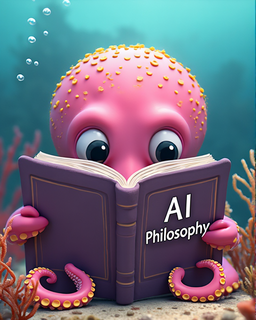

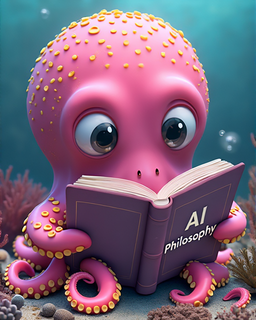

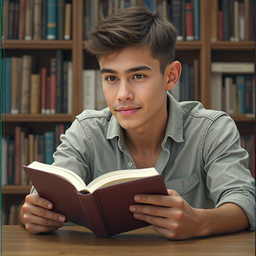

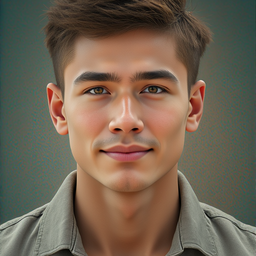

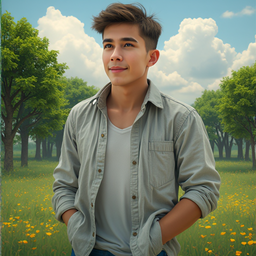

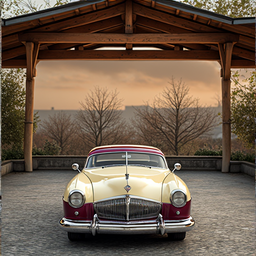

Match-and-Fuse generalizes to sketched inputs, enabling controlled storyboard generation.

Method

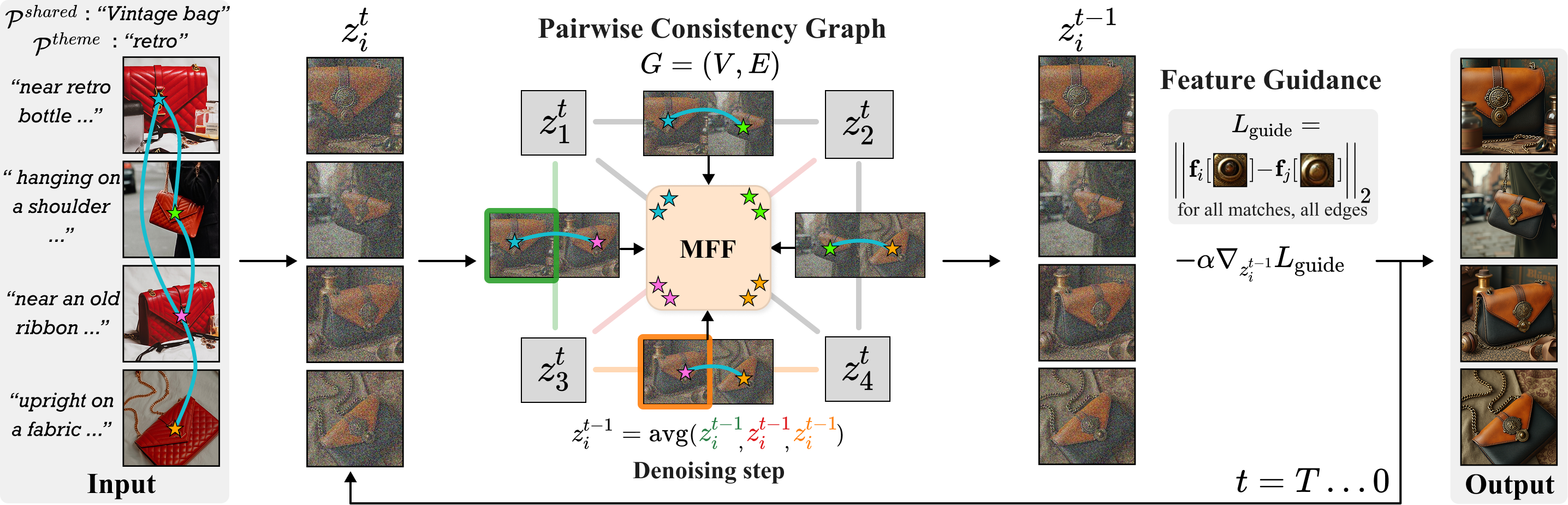

The input to our method is an unstructured set of \(N\) images along with user-provided prompts: \(\mathcal{P}^{shared}\) and \(\mathcal{P}^{theme}\), describing the target shared content and general style or theme, respectively. Our method outputs \(N\) images that preserve the source semantic layout while ensuring visual consistency across shared elements.

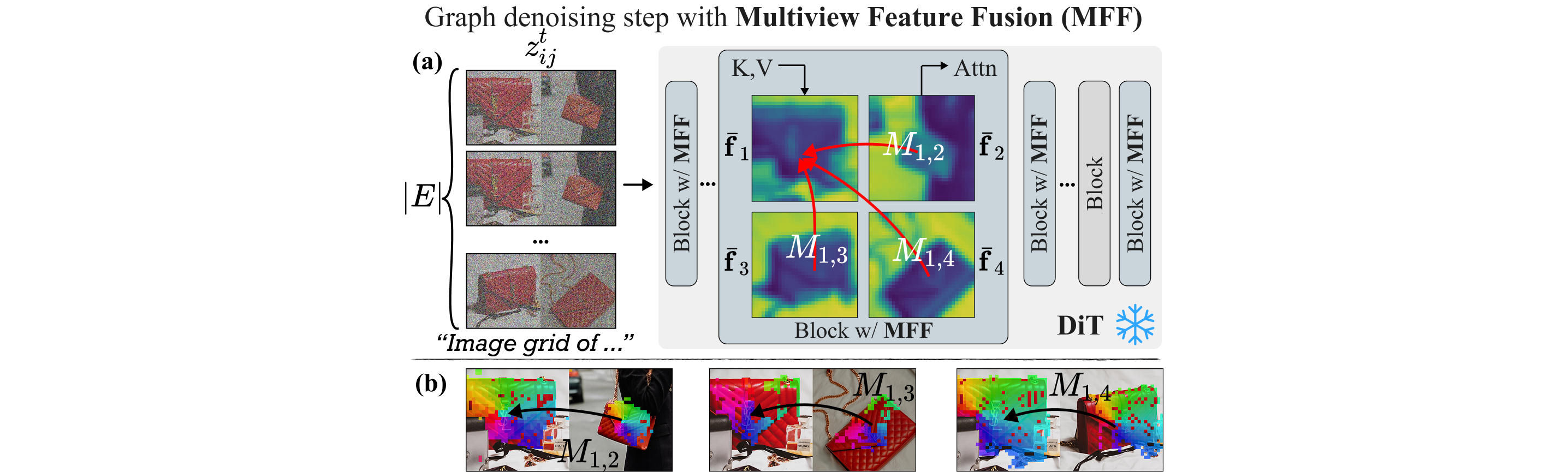

We build on a pre-trained, frozen, depth-conditioned T2I model. Although designed for single-image generation, these models have been shown to produce image grids when prompted with joint layouts (e.g., "Side-by-side views of..."), establishing cross-image relationships as demonstrated in recent work [1, 2]. However, this emergent capability, which we refer to as the grid prior, exhibits several key limitations: (i) it provides only partial consistency in appearance, shape, and semantics; (ii) the consistency deteriorates rapidly as more images are composed; and (iii) generating a single canvas is bounded by the model’s native resolution, limiting scalability.

Our method leverages the grid prior while overcoming its core limitations. Specifically, we model the image set as a Pairwise Consistency Graph comprising all possible two-image grid generations. This allows us to exploit the strong inductive bias of the grid prior while eliminating its scale limitation. To enhance visual consistency both within each image grid and across grids, we perform joint feature manipulation across all pairwise generations. To this end, we use dense 2D correspondences from the source set to automatically identify shared regions, without requiring object masks, and to enforce fine-grained alignment. We find that feature-space similarity along these matches correlates strongly with visual coherence, motivating the use of Multiview Feature Fusion. We further refine details via Feature Guidance, using a feature-matching objective.
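
As a rough illustration of the graph structure described above, the following sketch enumerates every two-image grid for a set of \(N\) images and shows how features of one image, gathered from the grids it takes part in, might be combined. The function names are hypothetical, and the element-wise mean is only an assumed stand-in for the fusion rule, which is not specified here; the sketch does not use correspondences or a diffusion model.

```python
from itertools import combinations


def pairwise_consistency_graph(num_images):
    """Return all unordered image pairs (i, j) with i < j.

    Each pair stands for one two-image grid generation.
    """
    return list(combinations(range(num_images), 2))


def grids_containing(image_index, edges):
    """Return the pairs (grids) in which a given image appears."""
    return [edge for edge in edges if image_index in edge]


def average_features(vectors):
    """Element-wise mean of equally sized feature vectors.

    Assumption: a plain mean is used here purely for illustration;
    the actual fusion rule may differ.
    """
    count = len(vectors)
    return [sum(v[k] for v in vectors) / count for k in range(len(vectors[0]))]


if __name__ == "__main__":
    edges = pairwise_consistency_graph(4)
    print(len(edges))                   # number of two-image grids for N = 4
    print(grids_containing(0, edges))   # grids that include image 0
    print(average_features([[1.0, 2.0], [3.0, 4.0]]))
```

The number of grids grows as \(N(N-1)/2\), while each image appears in \(N-1\) of them, so every image is seen in several pairwise contexts without any single canvas having to hold more than two images.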