Results

We provide the complete image sets used in Figs. 1 and 8, along with additional results.

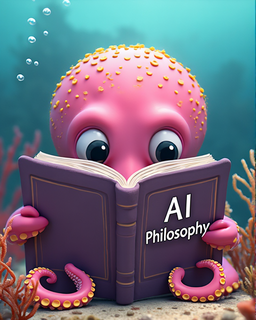

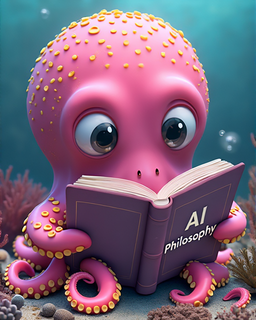

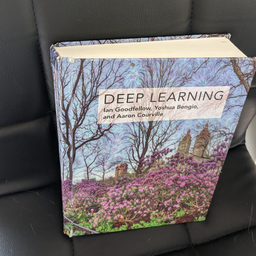

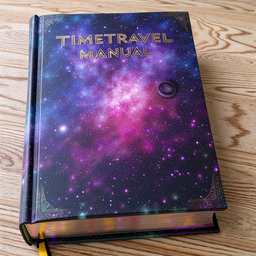

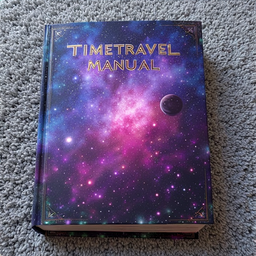

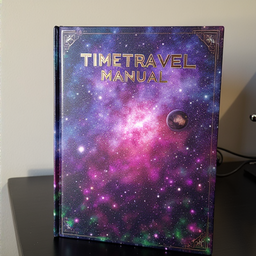

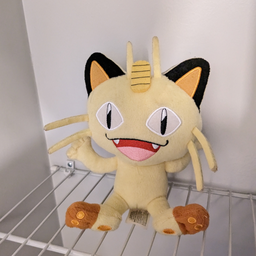

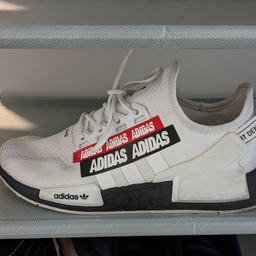

Match-and-Fuse generates consistent content across rigid and non-rigid shared elements, single- and multi-subject sets, and shared or varying backgrounds, preserving fine-grained consistency in textures, small details, and typography. Notably, it can also generate long consistent sequences.

\(\mathcal{P}^{shared}\): "A dog-shaped balloon" \(\mathcal{P}^{theme}\): "winter"

\(\mathcal{P}^{shared}\): "A dog sculpture" \(\mathcal{P}^{theme}\): "autumn"

\(\mathcal{P}^{shared}\): "Blue-white bag" \(\mathcal{P}^{theme}\): "luxury"

\(\mathcal{P}^{shared}\): "Vintage bag" \(\mathcal{P}^{theme}\): "retro"

\(\mathcal{P}^{shared}\): "A cat-shaped balloon" \(\mathcal{P}^{theme}\): "winter"

\(\mathcal{P}^{shared}\): "Extraterrestrial alien pet" \(\mathcal{P}^{theme}\): "autumn"

\(\mathcal{P}^{shared}\): "A cat knitted from a two-colored yarn" \(\mathcal{P}^{theme}\): "autumn"

\(\mathcal{P}^{shared}\): "Black-white knitted dog" \(\mathcal{P}^{theme}\): "autumn"

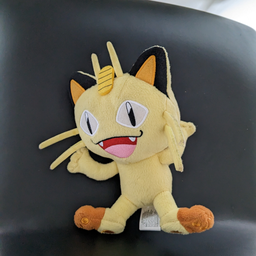

\(\mathcal{P}^{shared}\): "Cartoon characters" \(\mathcal{P}^{theme}\): "cartoon"

\(\mathcal{P}^{shared}\): "A 'Summer Potion' can" \(\mathcal{P}^{theme}\): "dreamy summer"

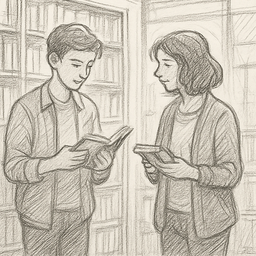

\(\mathcal{P}^{shared}\): "A man making a cocktail" \(\mathcal{P}^{theme}\): "inter-galactic kitchen"

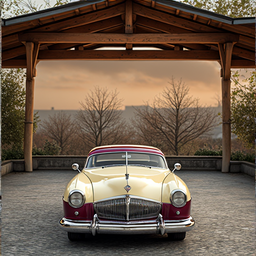

\(\mathcal{P}^{shared}\): "70s car" \(\mathcal{P}^{theme}\): "retro"

\(\mathcal{P}^{shared}\): "Claymation character in glasses" \(\mathcal{P}^{theme}\): "claymation"

\(\mathcal{P}^{shared}\): "Pixar character" \(\mathcal{P}^{theme}\): "Pixar"

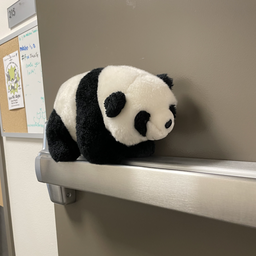

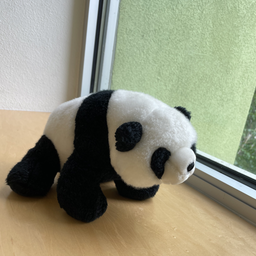

\(\mathcal{P}^{shared}\): "A real panda in a two-colored shirt" \(\mathcal{P}^{theme}\): "kindergarten"

\(\mathcal{P}^{shared}\): "A hamster in a cute costume" \(\mathcal{P}^{theme}\): "winter"

\(\mathcal{P}^{shared}\): "An exotic flower" \(\mathcal{P}^{theme}\): "jungle"

\(\mathcal{P}^{shared}\): "A flower toy" \(\mathcal{P}^{theme}\): "kids room"

\(\mathcal{P}^{shared}\): "Pixar character" \(\mathcal{P}^{theme}\): "Pixar"

\(\mathcal{P}^{shared}\): "Gingerbread house" \(\mathcal{P}^{theme}\): "gingerbread world"

\(\mathcal{P}^{shared}\): "Fine-dining dessert" \(\mathcal{P}^{theme}\): "luxury restaurant"

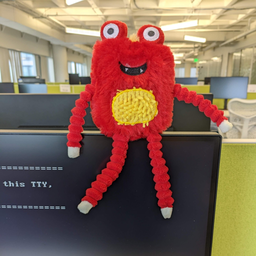

\(\mathcal{P}^{shared}\): "Graffiti-styled monster toy" \(\mathcal{P}^{theme}\): "graffiti"

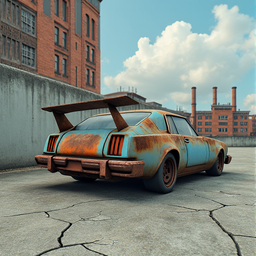

\(\mathcal{P}^{shared}\): "Rusty car" \(\mathcal{P}^{theme}\): "retro"

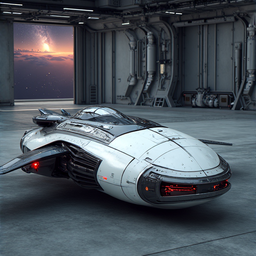

\(\mathcal{P}^{shared}\): "Space capsule" \(\mathcal{P}^{theme}\): "space"

\(\mathcal{P}^{shared}\): "Loft room" \(\mathcal{P}^{theme}\): "Loft"

\(\mathcal{P}^{shared}\): "Magical waterfall at sunset" \(\mathcal{P}^{theme}\): "sunset"

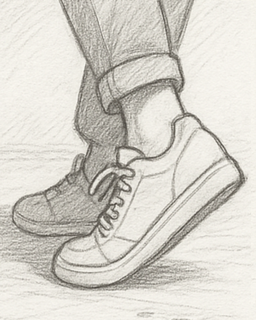

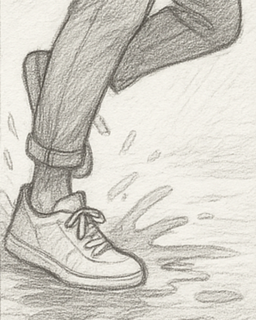

\(\mathcal{P}^{shared}\): "Warm woolen slipper" \(\mathcal{P}^{theme}\): "winter"

\(\mathcal{P}^{shared}\): "Indiana Jones-themed satchel" \(\mathcal{P}^{theme}\): "jungle"

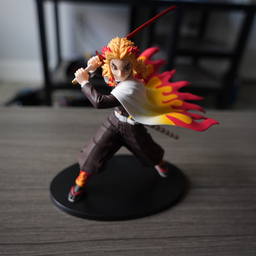

\(\mathcal{P}^{shared}\): "Figurine of a Matrix character" \(\mathcal{P}^{theme}\): "Matrix movie"

\(\mathcal{P}^{shared}\): "Rabbit from Alice in Wonderland" \(\mathcal{P}^{theme}\): "Alice in Wonderland"

\(\mathcal{P}^{shared}\): "Gummy bear with 'Cheer Up' text" \(\mathcal{P}^{theme}\): "jelly world"

\(\mathcal{P}^{shared}\): "Horror teddy bear with 'Eternal Rest' text" \(\mathcal{P}^{theme}\): "gothic horror"

\(\mathcal{P}^{shared}\): "Two-colored metal statue" \(\mathcal{P}^{theme}\): "winter"

\(\mathcal{P}^{shared}\): "Horror-style animal" \(\mathcal{P}^{theme}\): "night"

\(\mathcal{P}^{shared}\): "Cat robot" \(\mathcal{P}^{theme}\): "winter"

\(\mathcal{P}^{shared}\): "An animal knitted from a two-colored yarn" \(\mathcal{P}^{theme}\): "winter"